Legacy systems continue to form the backbone of enterprise operations across industries, from financial institutions running decades-old COBOL mainframes to healthcare organizations maintaining critical patient management systems. However, these aging infrastructures present significant challenges, including mounting maintenance costs, security vulnerabilities, and limited scalability that hinder digital transformation efforts.

Pressure to migrate legacy systems keeps rising as the technology landscape evolves. According to a Gartner report, by 2025, 40% of IT budgets will be dedicated to addressing technical debt caused by legacy systems. This financial burden drives organizations to seek modernization approaches that preserve critical business logic while moving to contemporary platforms, and many are now turning to AI-driven migration strategies.

Large Language Models (LLMs) have emerged as transformative tools for modernizing legacy software, with strong capabilities in code analysis, translation, and documentation generation. As of 2024, 63% of businesses are trialling generative AI for tasks like code generation and maintenance, a sign of growing confidence in applying LLMs to legacy modernization.

This article examines how LLMs support enterprise system migration by automating complex translation tasks, reducing risk, and accelerating modernization timelines. We explore practical applications, real-world case studies, implementation strategies, and future trends that make Large Language Models essential tools for successful digital transformation.

Understanding Legacy System Migration

Legacy system migration is one of the most complex challenges in modern IT infrastructure management. Organizations worldwide grapple with aging systems that, while functionally critical, create barriers to innovation and operational efficiency.

Definition and Significance of Legacy System Migration

Legacy system migration involves transitioning from outdated software, hardware, or platforms to modern alternatives while preserving essential business functionality. The process encompasses data migration, application modernization, and infrastructure updates that let organizations adopt contemporary technologies, increasingly with AI assistance.

Its significance extends beyond technical considerations to strategic business outcomes: stronger security, better performance, and integration capabilities that legacy systems cannot provide. Migration also helps organizations meet compliance requirements as regulatory frameworks evolve to mandate specific security and data protection standards.

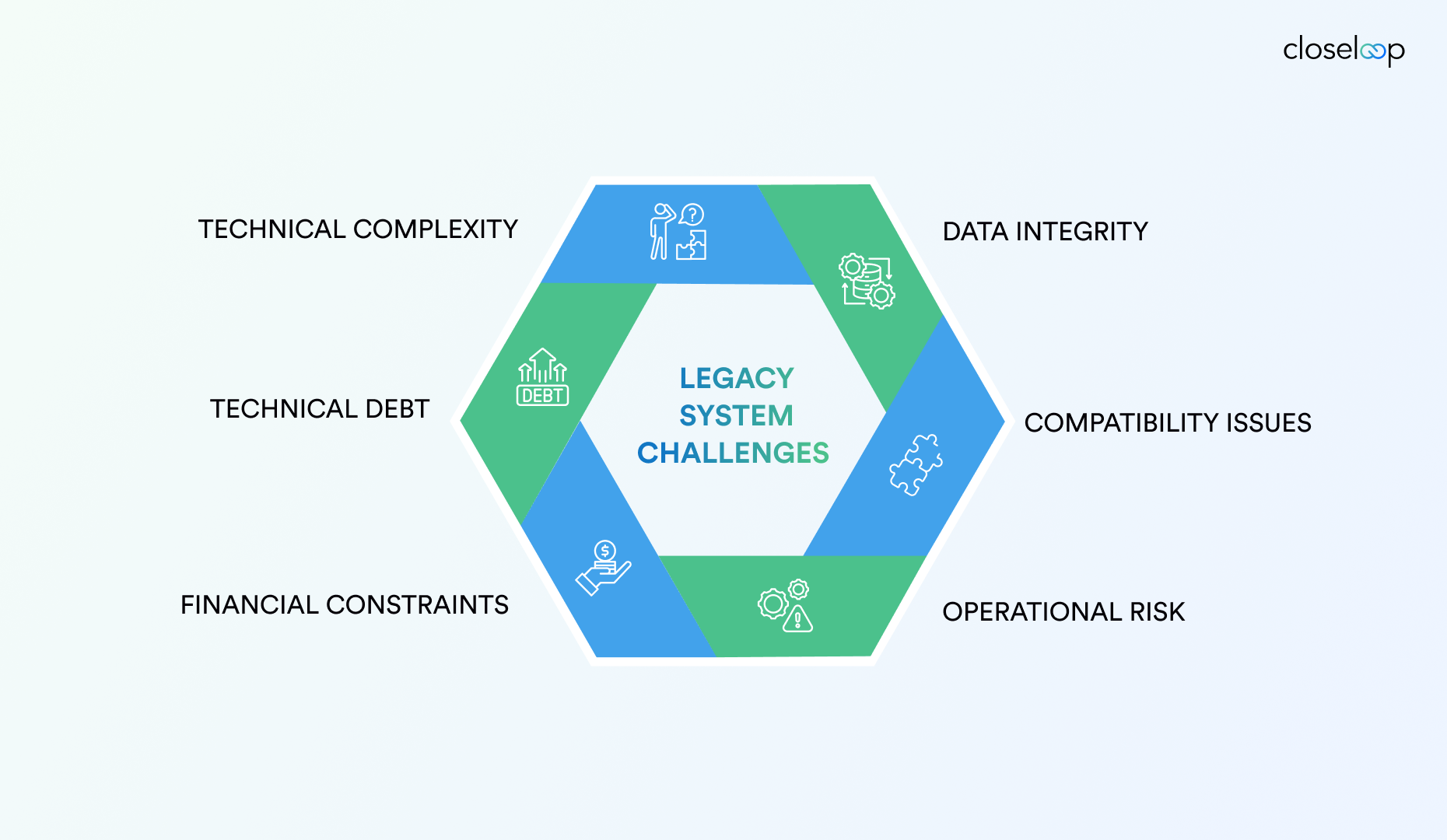

Common Challenges in Legacy Migration

| Challenge Category | Specific Issues | Impact on Migration |
|---|---|---|
| Technical Complexity | Undocumented code, proprietary languages, interdependencies | Extended timeline, increased costs |
| Data Integrity | Format incompatibilities, corruption risks, validation requirements | Risk of data loss, business disruption |
| Compatibility | API mismatches, protocol differences, integration failures | System fragmentation, functionality gaps |
| Operational Risk | Downtime potential, rollback requirements, performance degradation | Business continuity threats |
| Financial Constraints | Budget overruns, resource allocation, ROI uncertainty | Project delays, scope reduction |
| Technical Debt | Accumulated shortcuts, outdated practices, maintenance burden | Ongoing operational costs |

Legacy systems often contain millions of lines of code written in languages like COBOL, FORTRAN, or proprietary frameworks. Documentation is frequently incomplete or outdated, creating knowledge gaps that complicate migration efforts. Interdependencies between systems can also cause cascading effects, where a change to one component affects many others.

Traditional Approaches and Limitations

Conventional migration strategies include rehosting (lift and shift), replatforming (lift, tinker, and shift), refactoring (re-architecting), rebuilding (complete rewrite), and replacing (buying a new solution). Each relies heavily on manual processes that are time-consuming, error-prone, and resource-intensive compared with AI-assisted approaches.

Manual code analysis and translation require expert developers familiar with both the legacy and target technologies, and this expertise is increasingly scarce as older programming languages fall out of use. Traditional approaches also struggle to keep business logic consistent during translation, often producing functionality gaps or behavioral changes in the migrated system.

The Capabilities and Advantages of Large Language Models (LLMs)

Large Language Models represent a paradigm shift in automated software engineering, bringing sophisticated natural language understanding to code analysis and generation. These models are trained on vast corpora of source code, documentation, and software engineering practice, which makes them applicable to enterprise IT work such as automated system migration.

What are LLMs and Their Architecture

LLMs are neural networks trained on massive datasets containing programming code, technical documentation, and natural language descriptions of software systems. Generative AI models like GPT-4, Claude, and specialized code-focused variants understand syntax, semantics, and patterns across multiple programming languages simultaneously.

The transformer architecture enables LLMs to process context spanning thousands of lines of code while keeping track of relationships between different code sections. This contextual understanding allows them to recognize design patterns, identify functional equivalents across languages, and generate coherent translations that preserve the original intent.

Code Comprehension and Processing Capabilities

LLMs excel at parsing legacy code structures and extracting functional requirements from implementation details. They can identify deprecated APIs, recognize security vulnerabilities, and suggest modern equivalents for outdated programming patterns. This capability extends to understanding business logic embedded in procedural code and translating it into contemporary architectural patterns.

The models can process multiple file formats, including source code, configuration files, database schemas, and documentation. They can follow dependencies and trace data flow through complex system architectures, which supports more accurate and complete data migration planning.

Automation of Development Tasks

| LLM Capability | Legacy Migration Application | Benefits |
|---|---|---|
| Code Analysis | Automated documentation generation, dependency mapping | Reduces knowledge transfer time |
| Language Translation | COBOL to Java, legacy C++ to modern frameworks | Accelerates the conversion process |
| Refactoring | Code smell detection, optimization suggestions | Improves code quality |
| Test Generation | Automated unit test creation, validation scripts | Ensures functional integrity |
| Documentation | API documentation, system architecture diagrams | Facilitates knowledge preservation |

LLMs can automatically detect code smells, suggest performance optimizations, and generate test suites for migrated code. They can also create documentation that captures both technical implementation details and the reasoning behind the business logic, addressing one of the most significant challenges in legacy system maintenance.

Application of LLMs in Legacy System Migration

The practical application of LLMs in legacy migration shows their potential to turn traditionally manual, error-prone processes into automated, consistent workflows. These applications span the entire migration lifecycle, from initial analysis to post-migration optimization.

Code Comprehension and Automated Documentation Generation

Legacy systems often suffer from inadequate or outdated documentation, creating knowledge gaps that impede migration efforts. LLMs address this challenge by analyzing existing codebases and generating documentation that captures both technical implementation details and the reasoning behind business logic.

The automated documentation process involves parsing legacy code to identify functions, data structures, business rules, and system interfaces. LLMs can generate API documentation, system architecture diagrams, and user guides that facilitate knowledge transfer to development teams. This documentation serves as a foundation for migration planning and ongoing system maintenance.
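The parsing step can be illustrated with a small sketch. The hypothetical `CobolCallGraph` helper below scans fixed-format COBOL source, records each paragraph label, and lists the paragraphs it invokes with PERFORM. The resulting call graph could seed generated documentation or an LLM prompt. It is a regex heuristic, not a COBOL parser. It assumes labels start in column 8 and end with a period, it ignores sections, GO TO, and COPY members, and for PERFORM ... THRU it records only the first target.

```java
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: maps each COBOL paragraph to the paragraphs it PERFORMs. */
final class CobolCallGraph {
    // Assumption: fixed-format source; paragraph labels start in column 8 (Area A) and end in '.'.
    private static final Pattern PARAGRAPH = Pattern.compile("^.{7}([A-Z0-9][A-Z0-9-]*)\\.\\s*$");
    // Out-of-line PERFORM targets; the lookbehind keeps END-PERFORM from matching.
    private static final Pattern PERFORM = Pattern.compile("(?<![A-Z0-9-])PERFORM\\s+([A-Z0-9][A-Z0-9-]*)");
    // Words that follow PERFORM in inline loops rather than naming a paragraph.
    private static final Set<String> NOT_TARGETS = Set.of("UNTIL", "VARYING", "WITH", "TEST");

    private CobolCallGraph() {}

    static Map<String, Set<String>> build(List<String> sourceLines) {
        Map<String, Set<String>> graph = new LinkedHashMap<>();
        String current = null;
        for (String raw : sourceLines) {
            String line = raw.toUpperCase();
            // Skip comment lines: '*' or '/' in the indicator area (column 7).
            if (line.length() > 6 && (line.charAt(6) == '*' || line.charAt(6) == '/')) {
                continue;
            }
            Matcher label = PARAGRAPH.matcher(line);
            if (label.matches()) {
                current = label.group(1);
                graph.putIfAbsent(current, new LinkedHashSet<>());
                continue;
            }
            if (current == null) {
                continue; // Still before the first paragraph (division headers, data definitions).
            }
            Matcher perform = PERFORM.matcher(line);
            while (perform.find()) {
                String target = perform.group(1);
                if (!NOT_TARGETS.contains(target)) {
                    graph.get(current).add(target);
                }
            }
        }
        return graph;
    }
}
```

The map keeps paragraphs in source order, so it can be rendered directly as a dependency list or fed to a diagramming tool.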

LLMs can also create data warehouse migration guides that map legacy system features to modern equivalents, helping teams understand the functional requirements that must be preserved during migration. These guides include code snippets, configuration examples, and best practices for implementing similar functionality in target systems.

Code Translation and Migration Examples

Code translation is one of the most compelling applications of LLMs in legacy migration. Generative AI can parse legacy code and accelerate conversion between languages and frameworks, such as COBOL to Java, which demonstrates practical value in real-world migration projects.

Consider a legacy COBOL financial calculation module that processes loan interest calculations:

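```cobol
COMPUTE INTEREST-AMOUNT ROUNDED =
    PRINCIPAL-AMOUNT * INTEREST-RATE * LOAN-TERM / 100.
```

LLMs can translate this into modern Java while preserving business logic:

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// principalAmount and interestRate are BigDecimal values; loanTerm is an int.
BigDecimal interestAmount = principalAmount
        .multiply(interestRate)
        .multiply(BigDecimal.valueOf(loanTerm))
        .divide(BigDecimal.valueOf(100), 2, RoundingMode.HALF_UP);
```

The translation keeps COBOL's decimal fixed-point arithmetic instead of switching to binary floating point. BigDecimal multiplies exactly, and dividing to a scale of 2 with RoundingMode.HALF_UP (round half away from zero) matches the ROUNDED phrase when INTEREST-AMOUNT is defined with two decimal places. This shows how LLMs can streamline migration while respecting the precision requirements of financial calculations.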
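Getting the arithmetic right also depends on how legacy numeric fields arrive. The sketch below assumes an unsigned DISPLAY field with an implied decimal point. For example, in a PIC 9(7)V99 field the digits "000150000" mean 1500.00. The helper converts such a field to BigDecimal without passing through double, then applies the same calculation as the translated snippet. The class and method names are hypothetical. Signed, packed (COMP-3), and EBCDIC-encoded fields are out of scope.

```java
import java.math.BigDecimal;
import java.math.BigInteger;
import java.math.RoundingMode;

/** Hypothetical helpers for COBOL-style fixed-point values in Java. */
final class LegacyDecimal {
    private LegacyDecimal() {}

    /**
     * Interprets an unsigned zoned-decimal DISPLAY field (ASCII digits only) with an implied decimal point.
     * Example: "000150000" with impliedScale 2 (PIC 9(7)V99) becomes 1500.00.
     */
    static BigDecimal fromImpliedDecimal(String digits, int impliedScale) {
        if (digits.isEmpty() || !digits.chars().allMatch(c -> c >= '0' && c <= '9')) {
            throw new IllegalArgumentException("Expected unsigned digits, got: " + digits);
        }
        // Unscaled value plus scale reproduces the implied decimal point exactly.
        return new BigDecimal(new BigInteger(digits), impliedScale);
    }

    /** Simple interest with the rate in percent, rounded half away from zero to 2 decimal places. */
    static BigDecimal simpleInterest(BigDecimal principal, BigDecimal ratePercent, int term) {
        return principal
                .multiply(ratePercent)
                .multiply(BigDecimal.valueOf(term))
                .divide(BigDecimal.valueOf(100), 2, RoundingMode.HALF_UP);
    }
}
```

With these helpers, a principal field holding 000150000 (PIC 9(7)V99), a rate field holding 0425 (PIC 99V99, so 4.25), and a term of 3 produce an interest amount of 191.25.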

Incremental Modernization Support

LLMs enable phased migration strategies by supporting incremental modernization. Instead of requiring complete system rewrites, organizations can migrate individual components while keeping them integrated with the remaining legacy systems.

This approach involves creating modern microservices that replicate legacy functionality while providing APIs for integration. LLMs can generate wrapper services that translate between legacy protocols and modern interfaces, enabling gradual system evolution without disrupting ongoing operations.
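The sketch below makes the wrapper idea concrete by showing the translation step of such a service. It parses a fixed-width record in a hypothetical legacy layout into a typed Java record that a modern API could serialize. The assumed layout is a 10-character account ID, a 20-character customer name, and a 9-digit unsigned balance with two implied decimals. The reverse method lets modern callers keep feeding the legacy system during the transition. The layout, field names, and `AccountRecordAdapter` class are illustrative assumptions. A real adapter would take its offsets from the actual copybook.

```java
import java.math.BigDecimal;
import java.math.BigInteger;

/** Modern, typed view of a legacy account record (hypothetical fields). */
record Account(String accountId, String customerName, BigDecimal balance) {}

/** Hypothetical adapter between a fixed-width legacy record and the modern Account type. */
final class AccountRecordAdapter {
    // Assumed layout; in practice these come from the COBOL copybook.
    private static final int ID_LEN = 10, NAME_LEN = 20, BALANCE_LEN = 9, BALANCE_SCALE = 2;
    static final int RECORD_LEN = ID_LEN + NAME_LEN + BALANCE_LEN;

    private AccountRecordAdapter() {}

    static Account fromLegacy(String record) {
        if (record.length() != RECORD_LEN) {
            throw new IllegalArgumentException("Expected " + RECORD_LEN + " chars, got " + record.length());
        }
        String id = record.substring(0, ID_LEN).strip();
        String name = record.substring(ID_LEN, ID_LEN + NAME_LEN).strip();
        // Implied decimal point: "000012345" with scale 2 means 123.45.
        BigDecimal balance = new BigDecimal(new BigInteger(record.substring(ID_LEN + NAME_LEN)), BALANCE_SCALE);
        return new Account(id, name, balance);
    }

    /** Reverse direction, so new callers can still write records the legacy system understands. */
    static String toLegacy(Account account) {
        if (account.balance().signum() < 0) {
            throw new IllegalArgumentException("Unsigned field cannot hold " + account.balance());
        }
        String digits = account.balance().setScale(BALANCE_SCALE).unscaledValue().toString();
        if (digits.length() > BALANCE_LEN) {
            throw new IllegalArgumentException("Balance too large for field: " + account.balance());
        }
        return pad(account.accountId(), ID_LEN) + pad(account.customerName(), NAME_LEN)
                + "0".repeat(BALANCE_LEN - digits.length()) + digits;
    }

    private static String pad(String value, int width) {
        if (value.length() > width) {
            throw new IllegalArgumentException("Too long for " + width + "-char field: " + value);
        }
        return value + " ".repeat(width - value.length());
    }
}
```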

The incremental approach reduces migration risk by allowing thorough testing of individual components before full system cutover. LLMs support this process by generating integration tests, monitoring scripts, and rollback procedures that help keep the system stable throughout a legacy-to-cloud migration.
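One common way to structure incremental cutover is the strangler fig pattern. A thin routing layer sends each call either to the legacy implementation or to its migrated replacement. A component can then be cut over, or rolled back, by flipping a switch rather than redeploying. The sketch assumes a hypothetical `InterestService` interface and an in-memory toggle. A production system would more likely read the toggle from configuration or a feature-flag service.

```java
import java.math.BigDecimal;
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical contract shared by the legacy wrapper and the migrated implementation. */
interface InterestService {
    BigDecimal interest(BigDecimal principal, BigDecimal ratePercent, int term);
}

/** Strangler-fig style router: one switch decides which implementation serves traffic. */
final class MigrationRouter implements InterestService {
    private final InterestService legacy;
    private final InterestService modern;
    private final AtomicBoolean useModern = new AtomicBoolean(false); // start on the legacy path

    MigrationRouter(InterestService legacy, InterestService modern) {
        this.legacy = legacy;
        this.modern = modern;
    }

    void cutOver() { useModern.set(true); }

    void rollBack() { useModern.set(false); }

    @Override
    public BigDecimal interest(BigDecimal principal, BigDecimal ratePercent, int term) {
        if (!useModern.get()) {
            return legacy.interest(principal, ratePercent, term);
        }
        try {
            return modern.interest(principal, ratePercent, term);
        } catch (RuntimeException e) {
            // Fail safe: if the migrated path throws, serve this call from the legacy system.
            return legacy.interest(principal, ratePercent, term);
        }
    }
}
```

Falling back to the legacy path when the migrated code throws is one design choice. Teams that would rather surface every failure during cutover could remove the catch block and rely on the rollback switch alone.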

Automated Test Generation and Validation

| Test Type | LLM-Generated Components | Validation Benefits |
|---|---|---|
| Unit Tests | Function-level test cases, edge case scenarios | Ensures component functionality |
| Integration Tests | API compatibility tests, data flow validation | Verifies system interactions |
| Performance Tests | Load testing scripts, benchmark comparisons | Maintains performance standards |
| Regression Tests | Business logic validation, output comparison | Preserves critical functionality |

LLMs can analyze legacy system behavior and generate test suites that validate migrated functionality against the original specifications. These tests cover edge cases, error-handling scenarios, and performance benchmarks to confirm that migrated systems meet or exceed legacy system capabilities.
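Whether the test cases come from an LLM or from engineers, a differential (output-comparison) test is a simple way to check migrated code against the original. It runs both implementations on the same inputs, including boundary values, and reports every disagreement. The sketch below treats both versions as Java functions. That assumes the legacy logic is reachable through a wrapper or replayed from recorded input/output pairs. The `DifferentialCheck` name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.function.Function;
import java.util.stream.Stream;

/** Hypothetical differential test harness: runs legacy and migrated logic on the same inputs. */
final class DifferentialCheck {
    /** One disagreement between the two implementations. */
    record Mismatch<I, O>(I input, O legacyOutput, O migratedOutput) {}

    private DifferentialCheck() {}

    static <I, O> List<Mismatch<I, O>> compare(List<I> inputs, Function<I, O> legacy, Function<I, O> migrated) {
        List<Mismatch<I, O>> mismatches = new ArrayList<>();
        for (I input : inputs) {
            O expected = legacy.apply(input);
            O actual = migrated.apply(input);
            if (!Objects.equals(expected, actual)) {
                mismatches.add(new Mismatch<>(input, expected, actual));
            }
        }
        return mismatches;
    }

    /** Boundary values for an inclusive integer range, where off-by-one bugs tend to hide (assumes min < max). */
    static List<Integer> boundaryValues(int min, int max) {
        int mid = (int) (((long) min + max) / 2);
        return Stream.of(min, min + 1, mid, max - 1, max).distinct().toList();
    }
}
```

For BigDecimal results, `equals` also compares scale, so 1.0 and 1.00 count as different. A comparator based on `compareTo` may be the better test when only the numeric value matters.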

The automated test generation process involves examining legacy code paths, identifying critical business logic, and creating test scenarios that exercise all functionality. LLMs can also generate test data that mirrors production scenarios while maintaining data privacy requirements.
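Deterministic pseudonymization is one way to get production-like test data without exposing personal information. Each sensitive value is replaced by a keyed hash, so the same customer ID always maps to the same token and joins across tables still line up. The sketch uses HMAC-SHA256 from the standard `javax.crypto` API. The `Pseudonymizer` class, the token format, and the key handling are assumptions for illustration. The key must be kept secret, because anyone who holds it can recompute the tokens for known values.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical helper that replaces sensitive values with stable, keyed tokens for test data. */
final class Pseudonymizer {
    private static final String ALGORITHM = "HmacSHA256";
    private final SecretKeySpec key;

    Pseudonymizer(byte[] secretKey) {
        // Keep this key out of the test environment: anyone holding it can recompute the tokens.
        this.key = new SecretKeySpec(secretKey, ALGORITHM);
    }

    /** The same field name, value, and key always yield the same token, so joins still line up. */
    String token(String fieldName, String value) {
        try {
            Mac mac = Mac.getInstance(ALGORITHM);
            mac.init(key);
            // Mixing in the field name gives equal values in different columns different tokens.
            byte[] digest = mac.doFinal((fieldName + ":" + value).getBytes(StandardCharsets.UTF_8));
            StringBuilder token = new StringBuilder(fieldName).append('_');
            for (int i = 0; i < 8; i++) {
                token.append(String.format("%02x", digest[i] & 0xff)); // first 64 bits, hex-encoded
            }
            return token.toString();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(ALGORITHM + " is not available", e);
        }
    }
}
```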

Case Studies and Industry Insights

Real-world implementations of LLM-assisted legacy migration provide valuable insight into practical benefits and implementation challenges. These case studies report measurable improvements in migration timelines, cost, and quality.

Healthcare EHR System Migration Success

A large healthcare organization successfully migrated a legacy Electronic Health Record (EHR) system written in MUMPS to a modern cloud-native architecture using LLM assistance. The project involved migrating over 2 million lines of code while maintaining HIPAA compliance and ensuring zero patient data loss.

The LLM-assisted approach reduced the migration timeline from 18 months to 8 months while achieving 99.7% functional equivalency between the legacy and modern systems. Automated documentation generation produced comprehensive API specifications that eased integration with third-party healthcare systems. The migration resulted in a 40% improvement in system performance and a 60% reduction in maintenance costs.

Comparative Analysis of Migration Outcomes

| Metric | Traditional Migration | LLM-Assisted Migration | Improvement |
|---|---|---|---|
| Timeline | 12-24 months | 6-12 months | ~50% reduction |
| Code Quality | 70% accuracy | 90% accuracy | +20 percentage points |
| Documentation Coverage | 30% complete | 85% complete | +55 percentage points |
| Testing Coverage | 60% functional test coverage | 90% functional test coverage | +30 percentage points |
| Cost Efficiency | Baseline | 35% cost reduction | 35% savings |

Customization and Retry Mechanisms

Successful LLM implementations incorporate domain-specific customization and iterative improvement mechanisms. Organizations fine-tune models on their own legacy codebases and business logic patterns to improve translation accuracy and keep coding standards consistent.

Retry mechanisms feed validation failures back to the model so it can refine its output. When automated tests identify functional discrepancies, the system regenerates the affected code sections with additional context and constraints until acceptable accuracy is achieved. This iterative approach significantly improves migration quality while reducing the need for manual intervention.
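A retry loop of this kind can be sketched without committing to any particular model API. Below, `CodeGenerator` and `Validator` are hypothetical interfaces standing in for the LLM call and the automated test run. Each failed attempt adds the validator's messages to the context for the next attempt. The loop stops after a fixed budget, an assumption added here, so unresolved sections go to a human instead of being retried forever.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

/** Hypothetical stand-in for an LLM call: produces target code from legacy source plus feedback. */
interface CodeGenerator {
    String generate(String legacySource, List<String> feedback);
}

/** Hypothetical stand-in for automated tests: returns failure messages (empty means pass). */
interface Validator {
    List<String> validate(String candidate);
}

final class RetryingTranslator {
    private final CodeGenerator generator;
    private final Validator validator;
    private final int maxAttempts;

    RetryingTranslator(CodeGenerator generator, Validator validator, int maxAttempts) {
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        this.generator = generator;
        this.validator = validator;
        this.maxAttempts = maxAttempts;
    }

    /** Returns validated code, or empty if every attempt failed and a human should take over. */
    Optional<String> translate(String legacySource) {
        List<String> feedback = new ArrayList<>();
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            String candidate = generator.generate(legacySource, List.copyOf(feedback));
            List<String> failures = validator.validate(candidate);
            if (failures.isEmpty()) {
                return Optional.of(candidate);
            }
            // Feed the concrete failures back as extra context for the next attempt.
            feedback.addAll(failures);
        }
        return Optional.empty();
    }
}
```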


Integration of LLMs with Existing Migration Strategies

LLMs complement rather than replace traditional migration methodologies, adding automated capabilities to each approach that reduce manual effort and improve outcomes. The result is a hybrid workflow that draws on both human expertise and AI efficiency.

Complementing Traditional Migration Strategies

Rehosting Enhancement: LLMs automate infrastructure compatibility analysis and generate configuration scripts for cloud platforms. They can identify potential performance bottlenecks and suggest optimization strategies for legacy applications running in new environments.

Replatforming Acceleration: AI-powered analysis identifies platform-specific optimizations and generates migration scripts that leverage new platform capabilities. LLMs can automatically update database queries, API calls, and configuration files to align with target platform best practices.

Refactoring Intelligence: LLMs provide sophisticated code analysis that identifies refactoring opportunities, suggests architectural improvements, and generates modernized code that keeps functional equivalence while adopting contemporary design patterns.

Compatibility Checks and Integration Support

LLMs can perform compatibility analysis across technology stacks, identifying potential integration issues before they affect migration timelines. They can analyze API compatibility, data format requirements, and protocol differences between legacy and target systems.

Integration support includes generating adapter layers, translation services, and middleware components that enable seamless communication between legacy and modern systems during the transition. This capability is particularly valuable for organizations following a phased migration strategy.
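Part of that analysis is mechanical at its simplest: compare each legacy field definition with its target column and flag anything that would be lost or truncated. The sketch below uses a hypothetical `FieldSpec` description (name, type, length) rather than any real schema API. In practice an LLM might draft these mappings from copybooks and DDL, and a check like this would catch mismatches before any data moves.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

/** Hypothetical, simplified field description shared by legacy and target schemas. */
record FieldSpec(String name, String type, int length) {}

final class SchemaCompatibility {
    private SchemaCompatibility() {}

    /** Lists fields that are missing, change type, or shrink in the target (assumes unique target names). */
    static List<String> check(List<FieldSpec> legacy, List<FieldSpec> target) {
        Map<String, FieldSpec> targetByName = target.stream()
                .collect(Collectors.toMap(FieldSpec::name, Function.identity()));
        List<String> issues = new ArrayList<>();
        for (FieldSpec src : legacy) {
            FieldSpec dst = targetByName.get(src.name());
            if (dst == null) {
                issues.add(src.name() + ": missing in target schema");
            } else if (!src.type().equals(dst.type())) {
                issues.add(src.name() + ": type " + src.type() + " -> " + dst.type() + " needs a conversion rule");
            } else if (dst.length() < src.length()) {
                issues.add(src.name() + ": length " + src.length() + " -> " + dst.length() + " may truncate data");
            }
        }
        return issues;
    }
}
```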

Risk Mitigation Through Enhanced Testing

| Risk Category | LLM Mitigation Strategy | Implementation Method |
|---|---|---|
| Functional Errors | Comprehensive test generation | Automated unit and integration test creation |
| Performance Degradation | Benchmark analysis | Performance comparison and optimization suggestions |
| Security Vulnerabilities | Security audit automation | Vulnerability detection and remediation recommendations |
| Data Integrity | Validation script generation | Automated data consistency checks |

LLMs generate extensive test suites that validate both functional correctness and non-functional requirements such as performance, security, and reliability. These tests provide confidence in migration outcomes and quickly surface issues that require manual intervention.
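Reconciliation is a common validation pattern for data integrity. It compares the row count and an order-independent checksum of the source and target extracts, and drills into individual rows only when the totals disagree. The sketch assumes both sides have already been normalized into the same canonical string per row: same field order, trimmed values, consistent number formatting. That normalization is usually the hard part. The `TableFingerprint` name is hypothetical. Matching fingerprints make large discrepancies unlikely but do not prove the tables are identical, so this is a cheap first-pass check.

```java
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.zip.CRC32;

/** Hypothetical reconciliation summary for one table: row count plus an order-independent checksum. */
record TableFingerprint(long rowCount, long checksum) {

    static TableFingerprint of(List<String> canonicalRows) {
        long sum = 0;
        for (String row : canonicalRows) {
            CRC32 crc = new CRC32();
            crc.update(row.getBytes(StandardCharsets.UTF_8));
            // Adding per-row checksums makes the total independent of row order.
            sum += crc.getValue();
        }
        return new TableFingerprint(canonicalRows.size(), sum);
    }
}
```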

Challenges and Limitations of Using LLMs for Legacy Migration

Despite their transformative potential, LLMs face several constraints that organizations must address to achieve successful legacy migration outcomes. Understanding these limitations enables better planning and risk mitigation.

Current Technological Constraints

LLMs operate within context length limitations that can restrict their ability to process extremely large legacy codebases comprehensively. While modern models can handle thousands of lines of code, enterprise systems containing millions of lines may require segmentation strategies that could miss subtle interdependencies.
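A minimal segmentation strategy is sketched below. It splits source lines into chunks under an approximate token budget and repeats a few lines of overlap between neighboring chunks, so statements near a boundary are still seen in context. The four-characters-per-token estimate is a rough heuristic, not any model's real tokenizer, and the class name is hypothetical. Splitting at program or paragraph boundaries would usually lose less context than splitting by size alone.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical chunker that keeps each segment under an approximate token budget. */
final class SourceChunker {
    private SourceChunker() {}

    // Rough heuristic: about four characters per token; real tokenizers differ by model and language.
    static int estimateTokens(String line) {
        return line.length() / 4 + 1;
    }

    static List<List<String>> chunk(List<String> lines, int maxTokens, int overlapLines) {
        if (overlapLines < 0) throw new IllegalArgumentException("overlapLines must be >= 0");
        List<List<String>> chunks = new ArrayList<>();
        List<String> current = new ArrayList<>();
        int tokens = 0;
        for (String line : lines) {
            int cost = estimateTokens(line);
            if (!current.isEmpty() && tokens + cost > maxTokens) {
                chunks.add(List.copyOf(current));
                // Carry the last few lines into the next chunk as shared context.
                current = new ArrayList<>(current.subList(Math.max(0, current.size() - overlapLines), current.size()));
                tokens = current.stream().mapToInt(SourceChunker::estimateTokens).sum();
            }
            current.add(line);
            tokens += cost;
        }
        if (!current.isEmpty()) {
            chunks.add(List.copyOf(current));
        }
        return chunks;
    }
}
```

A single line larger than the budget still becomes its own chunk rather than being split, which keeps statements intact at the cost of occasionally exceeding the limit.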

The models also face challenges with highly domain-specific or proprietary languages where training data is limited. Legacy systems often use custom frameworks, proprietary APIs, or modified language implementations that deviate from standard syntax and semantics. These variations can lead to translation errors or incomplete functional coverage.


Risk of Inaccuracies and Verification Requirements

LLM-generated code requires human verification to ensure functional correctness and business logic preservation. While automation significantly reduces manual effort, expert oversight remains essential for validating critical system components and identifying subtle behavioral differences.

The verification process involves comprehensive testing, code review, and business logic validation that requires domain expertise. Organizations must establish validation frameworks that systematically verify LLM outputs against legacy system behavior under various scenarios, including edge cases and error conditions.

Security and Ethical Considerations

Automated code transformation introduces security considerations that require careful evaluation. LLMs may inadvertently introduce vulnerabilities or fail to preserve security mechanisms present in legacy systems. Organizations must implement security auditing processes that validate the security posture of migrated systems.
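Security auditing normally relies on established static analysis tools, but the idea of an automated gate on generated code fits in a few lines. The hypothetical `GeneratedCodeGate` below flags two patterns that translated data-access code can get wrong. The first is SQL assembled by string concatenation, which invites injection; `PreparedStatement` parameters are the usual fix. The second is a string literal assigned to a password-like variable. The regular expressions are crude assumptions that only show the shape of such a check, not a substitute for a real scanner.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical pre-review gate: flags risky patterns in LLM-generated Java source lines. */
final class GeneratedCodeGate {
    // Crude heuristic: a SQL keyword inside a string literal that is then concatenated with '+'.
    private static final Pattern CONCATENATED_SQL = Pattern.compile(
            "\"[^\"]*\\b(SELECT|INSERT|UPDATE|DELETE)\\b[^\"]*\"\\s*\\+", Pattern.CASE_INSENSITIVE);
    // Crude heuristic: a string literal assigned to a variable whose name suggests a secret.
    private static final Pattern HARDCODED_SECRET = Pattern.compile(
            "\\b\\w*(password|passwd|secret)\\w*\\s*=\\s*\"[^\"]+\"", Pattern.CASE_INSENSITIVE);

    private GeneratedCodeGate() {}

    static List<String> findings(List<String> generatedLines) {
        List<String> findings = new ArrayList<>();
        for (int i = 0; i < generatedLines.size(); i++) {
            String line = generatedLines.get(i);
            if (CONCATENATED_SQL.matcher(line).find()) {
                findings.add("line " + (i + 1) + ": SQL built by concatenation; use PreparedStatement parameters");
            }
            if (HARDCODED_SECRET.matcher(line).find()) {
                findings.add("line " + (i + 1) + ": possible hard-coded credential");
            }
        }
        return findings;
    }
}
```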

Ethical considerations include ensuring that automated migration preserves accessibility features, maintains data privacy protections, and adheres to regulatory compliance requirements. LLMs must be guided by security and compliance frameworks that prevent the introduction of vulnerabilities during the migration process.

Domain-Specific Fine-tuning Requirements

| Requirement Category | Implementation Strategy | Expected Outcome |
|---|---|---|
| Industry Compliance | Fine-tuning on regulatory code patterns | Maintained compliance standards |
| Business Logic Preservation | Training on domain-specific algorithms | Accurate functional translation |
| Coding Standards | Customization to organizational practices | Consistent code quality |
| Security Protocols | Integration of security best practices | Enhanced security posture |

Effective LLM implementation requires domain-specific fine-tuning that incorporates industry-specific coding patterns, compliance requirements, and business logic conventions. This customization involves training models on representative legacy codebases and validating them against known correct translations.


The Role of Closeloop in Legacy System Migration

Closeloop specializes in comprehensive legacy system migrations that combine infrastructure modernization with application transformation. The company's holistic approach addresses the complete spectrum of migration challenges, from data center consolidation to cloud-native application development.

Its migration methodology encompasses infrastructure migration to modern cloud platforms, platform migration that modernizes underlying technology stacks, and data center and database migration services. This integrated approach is designed to optimize every system component for contemporary operational requirements while maintaining business continuity throughout the transition.

Closeloop's expertise delivers measurable business outcomes, including significant reductions in IT operational costs, enhanced disaster recovery capabilities, and improved operational efficiency. Client organizations report increased system reliability, faster deployment cycles, and enhanced scalability that supports business growth. The methodology emphasizes minimizing disruption during migration while maximizing the strategic benefits of modernization investments.

The company integrates AI and LLM-assisted methodologies into its migrations through automated code analysis, intelligent translation services, and comprehensive testing automation. This technology integration accelerates migration timelines while strengthening quality assurance. The AI-augmented approach combines machine learning capabilities with human expertise to preserve critical business logic while adopting modern architectural patterns and operational practices.

Emerging Trends and Future Outlook

The intersection of AI advancement and legacy application modernization continues to evolve rapidly, with new capabilities promising even greater automation and accuracy in migration processes. These trends point to a future in which LLM-assisted migration becomes the standard approach to enterprise modernization.

Advancements in LLM Capabilities

The future of code migration is AI-augmented, with LLMs playing a central role in end-to-end modernization pipelines. Current research focuses on improving contextual understanding of large codebases, so that models can track complex interdependencies across million-line systems.

Emerging LLM architectures incorporate specialized training for code translation, with models fine-tuned specifically for legacy language patterns and modern framework conventions. These specialized models show improved accuracy in preserving business logic while adopting contemporary coding practices and security standards.

Multi-modal LLMs that can process code, documentation, and system diagrams together are enabling more comprehensive migration planning. These capabilities allow a holistic view of the system that considers not just code functionality but also architectural patterns, performance characteristics, and operational requirements.

Automation and Reduced Manual Overhead

Future LLM capabilities will enable end-to-end automation of routine translation tasks with minimal human intervention. Advanced validation mechanisms will automatically verify functional equivalence and flag areas requiring expert review, streamlining quality assurance.

Intelligent project management features will enable LLMs to generate migration timelines, resource allocation recommendations, and risk mitigation strategies based on codebase analysis. This capability will help organizations better plan and execute migration projects with improved predictability and resource efficiency.

AI-Developer Collaboration Models

| Collaboration Model | AI Contribution | Human Contribution | Outcome Benefits |
|---|---|---|---|
| Pair Programming | Real-time translation suggestions | Logic validation, architectural decisions | Improved accuracy, faster development |
| Review-Based | Complete code generation | Quality assurance, business logic verification | Reduced manual coding, maintained quality |
| Mentorship | Best practice recommendations | Domain expertise, strategic guidance | Enhanced learning, better practices |
| Continuous Integration | Automated testing, deployment scripts | Monitoring, exception handling | Streamlined operations, rapid iteration |

The future involves sophisticated collaboration models in which LLMs serve as intelligent assistants that augment human capabilities rather than replace human judgment. These partnerships apply AI efficiency to routine tasks while preserving human oversight for critical decisions and creative problem-solving.

Security and Ethical AI Integration

Future LLM implementations will incorporate enhanced security frameworks that automatically validate code for common vulnerabilities and compliance requirements. These systems will include built-in ethical guidelines that ensure migration outcomes align with accessibility standards, data privacy requirements, and regulatory compliance mandates.

Advanced verification mechanisms will enable organizations to maintain audit trails throughout the migration process, documenting all automated changes and providing transparency for compliance and governance requirements.
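A hash chain is one way to make such an audit trail tamper-evident. Each entry stores the SHA-256 hash of its own content together with the previous entry's hash, so editing, reordering, or deleting an earlier record breaks every hash after it. The sketch below uses only `java.security.MessageDigest`. The entry fields and the `MigrationAuditLog` class are hypothetical. A real deployment would also need durable, access-controlled storage and a copy of the latest hash kept elsewhere, because the chain alone cannot reveal entries truncated from the end.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical append-only record of automated changes, chained by SHA-256 hashes. */
final class MigrationAuditLog {
    /** One automated change: what was touched, what happened, and who or what did it. */
    record Entry(String artifact, String change, String actor, String previousHash, String hash) {}

    private static final String GENESIS = "0".repeat(64);
    private final List<Entry> entries = new ArrayList<>();

    Entry append(String artifact, String change, String actor) {
        String previous = entries.isEmpty() ? GENESIS : entries.get(entries.size() - 1).hash();
        Entry entry = new Entry(artifact, change, actor, previous, hash(previous, artifact, change, actor));
        entries.add(entry);
        return entry;
    }

    List<Entry> entries() {
        return List.copyOf(entries);
    }

    /** Recomputes the chain; false if an entry was edited, reordered, or removed before the last one. */
    static boolean verify(List<Entry> chain) {
        String previous = GENESIS;
        for (Entry e : chain) {
            String expected = hash(previous, e.artifact(), e.change(), e.actor());
            if (!e.previousHash().equals(previous) || !e.hash().equals(expected)) {
                return false;
            }
            previous = e.hash();
        }
        return true;
    }

    private static String hash(String... fields) {
        try {
            MessageDigest md = MessageDigest.getInstance("SHA-256");
            for (String field : fields) {
                byte[] bytes = field.getBytes(StandardCharsets.UTF_8);
                // Length-prefix each field so ("ab", "c") and ("a", "bc") hash differently.
                md.update(Integer.toString(bytes.length).getBytes(StandardCharsets.UTF_8));
                md.update((byte) ':');
                md.update(bytes);
            }
            StringBuilder hex = new StringBuilder();
            for (byte b : md.digest()) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is not available", e);
        }
    }
}
```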

Conclusion

Large Language Models are fundamentally transforming legacy system migration by bringing new levels of automation, accuracy, and efficiency to traditionally manual processes. A recent IDC survey, conducted in January 2024, shows that 23% of organizations are now directing their budgets toward GenAI projects, indicating growing recognition of AI's potential in enterprise modernization.

The strategic implementation of LLMs in migration projects requires a careful balance between automation and human expertise. Organizations that successfully combine AI-powered tools with domain knowledge and validation frameworks achieve better outcomes in timeline reduction, cost efficiency, and system quality.

The results reported above suggest that LLM-augmented migration approaches deliver measurable benefits, including timeline reductions of around 50%, improved code quality metrics, and better documentation coverage. These improvements translate into significant cost savings and reduced operational risk during migration projects.

Organizations embarking on legacy modernization initiatives should seriously consider adopting LLM-augmented migration strategies that combine artificial intelligence capabilities with proven migration methodologies. This hybrid approach accelerates digital transformation while preserving critical business logic and ensuring system reliability throughout the transition process.
