Gone are the days when AI was limited to experiments or isolated pilots. Now, it is part of daily operations, used by your teams across sales, finance, product, customer support, and IT, an everyday context that demands AI security thinking from the start. Models are being integrated into platforms that handle sensitive business data, often without a clear view of how they function or where the data goes, making this a practical AI security guide moment rather than a theoretical exercise.

This matters because the speed of AI adoption has outpaced the development of internal guardrails. Most companies don’t have a standard process to evaluate AI tools before they go live. In many cases, tools are added without involving security, procurement, or data teams. Once in place, they operate with access to internal systems, client data, and other confidential inputs, which is exactly where AI security for enterprises needs disciplined policies.

Unlike traditional software, AI systems rely on user prompts, third-party APIs, and dynamic content. They often generate responses based on past inputs, which creates risks if no one is monitoring that behavior, a core concern in AI model security and governance. Some store user data by default, while others share data back to the vendor. Most don’t explain this clearly upfront, creating tension at the intersection of data privacy and AI security.

At the same time, employees are getting more comfortable using AI to speed up their work. That includes feeding customer queries, revenue projections, or contracts into tools that may not be approved or secure. This creates exposure at the company level and highlights how to secure AI systems beyond traditional SaaS controls.

In 2025, companies are starting to realize that AI governance needs to catch up. Not just because of compliance, but because without controls, there is no way to track who’s using what, or how data is being handled. This leads to duplication, blind spots, and growing security risks, an opportunity to formalize an AI security framework for businesses.

If you are responsible for AI systems, infrastructure, or data protection, now is the time to get ahead of this shift. This blog focuses on what risks are emerging, where traditional security tools fall short, and how to build a plan that actually works, with AI security best practices that teams can implement immediately.

What’s Driving the Security Spike in AI

AI is no longer just in the hands of data scientists. Sales, finance, product, and customer support teams are all using it. LLMs are writing emails, summarizing documents, answering questions, and even generating approvals. Many of these tools are adopted without security reviews or cross-team coordination, amplifying AI security risks in enterprises. Most users treat them like everyday software, not systems that handle sensitive data.

The type of data being processed adds to the risk. Unlike earlier tools that relied on structured fields and clean inputs, AI thrives on messy content such as PDFs, chats, screenshots, presentations, and voice notes. These files often contain confidential material, but employees rarely stop to check. Without strong policies, sensitive information moves freely into tools that aren’t designed to protect it, an example of emerging threats in AI and machine learning security.

At the same time, internal experimentation is moving faster than the teams tasked with securing it. A support team might build a simple model to help sort tickets. A finance lead might use an internal tool to generate monthly summaries. A sales manager might test an AI writing assistant to speed up proposals. These tools are effective, but they’re often deployed without formal testing, documentation, or sign-off, exactly why enterprise AI security programs must create simple, enforceable guardrails. What starts as a small experiment quickly becomes a production tool with no oversight.

Most of these models connect to core systems through APIs. These integrations request access to inboxes, CRMs, calendars, and storage platforms. Permissions are broad and long-lasting. Few teams track what data is being shared, who it’s shared with, or where it ends up, gaps that modern AI security tools for enterprises are designed to close.

Meanwhile, oversight hasn’t caught up. By the time legal or IT gets involved, the tool is already active and connected to internal systems. No one has reviewed how it handles data, what it retains, or whether it aligns with internal policy. This lack of coordination leaves gaps that are hard to fix later and complicates AI security compliance for enterprise leaders.

The more these tools get embedded across teams, the harder it becomes to monitor risk. And the longer that visibility gap remains, the more exposed your organization becomes, underscoring the benefits of AI security solutions that provide centralized visibility.

Related Read: Do you need Chief AI Officer?

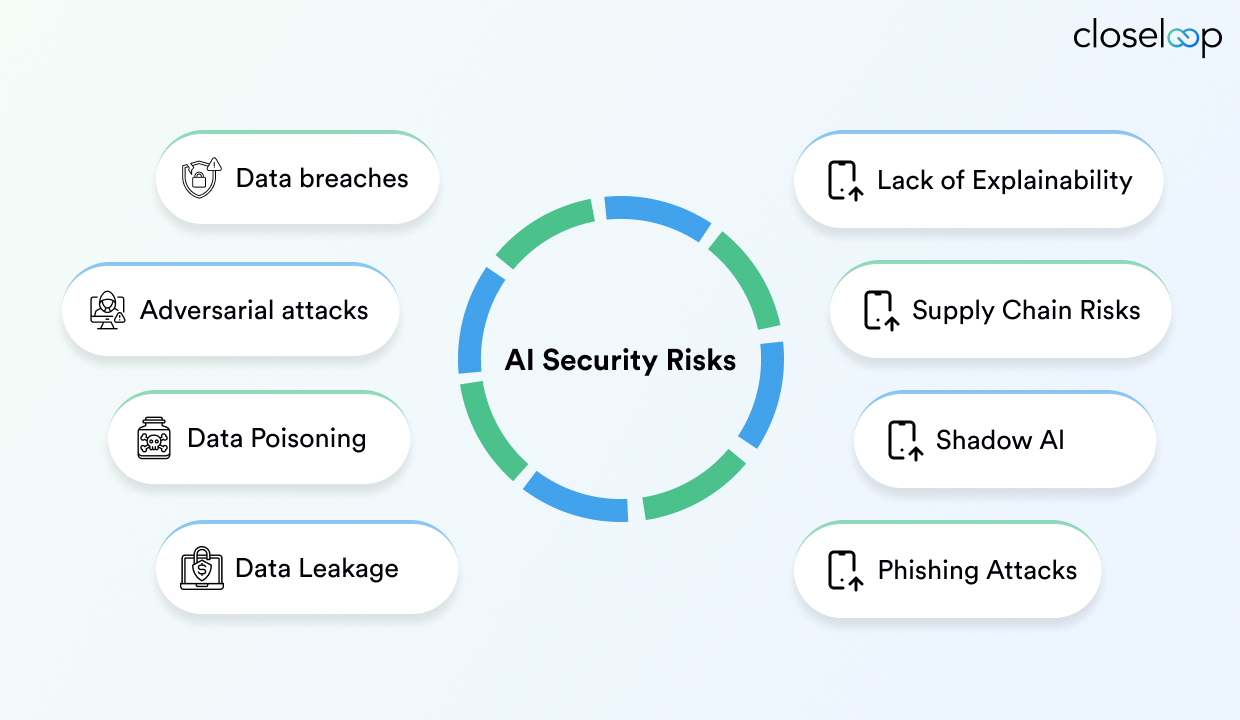

Top AI Security Risks in 2025

Most security conversations still focus on external threats. But in AI, many of the biggest risks come from how the tools are used internally. Here are the eight risks that are catching companies off guard this year.

1. Shadow AI

Employees often use AI tools on their own, without approvals, documentation, or review. A sales rep might paste pricing data into a chatbot to get a better pitch. A marketer might run campaign messaging through a free summarizer. These habits seem harmless but can expose customer records, internal IP, or confidential plans.

The challenge is that none of this activity is tracked. There’s no logging, no formal approval, and no review of what was shared. And once data leaves the environment, there’s no way to get it back.

2. Prompt Injection Attacks

Models don’t just follow logic; they respond to instructions in natural language. This opens the door to prompt injection attacks, where users manipulate input to change the model’s behavior.

For example, someone could ask an AI chatbot to ignore access rules or reveal hidden instructions. If the model isn’t secured against these patterns, it will comply, often without logging the request or warning the user. These patterns are well-documented threats in AI and machine learning security

3. Data Leakage

Some models store prompts and responses to improve accuracy. Others keep logs to support monitoring. In both cases, sensitive data can end up sitting in places it shouldn’t.

That information might resurface in future outputs or get shared back to vendors for retraining. Most users don’t know what’s being saved, how long it’s retained, or who can access it later. Managing retention and reuse is central to AI model security and governance

|

According to Stanford’s 2025 AI Index Report, AI incidents rose by 56.4% in just one year, with 233 reported cases across 2024. These included data breaches, system failures, and other events involving sensitive information. |

4. Model Misuse

AI models are flexible, but that doesn’t mean they’re suited for every task. Using a general-purpose model for legal advice, financial planning, or compliance workflows can create problems. The safer path is to apply role-based access and scenario testing drawn from AI security best practices

Outputs may sound correct but rely on outdated or shallow data. Decisions made using this content can trigger errors, complaints, or worse, regulatory exposure.

5. API Exposure

Most AI tools rely on third-party APIs to work. These APIs connect to file systems, calendars, emails, and CRMs. Many of these integrations request wide access but aren’t properly scoped. In most cases, no one is actively tracking what data is being pulled or how it’s used.

If an API isn’t throttled or logged, it can be used to extract large volumes of data without being noticed. This becomes especially risky when tools are connected to multiple systems at once. Proper scoping and monitoring are foundational in any AI security framework for businesses

6. Training Data Poisoning

AI models learn from the data they are fed. If that data is incorrect, manipulated, or biased, the model will reflect it in its behavior.

In open environments, bad actors can intentionally introduce false inputs. In closed systems, poorly labeled or unvetted data can still distort outcomes. Once that behavior is learned, it is hard to unwind without retraining.

7. Bias Amplification

Even without malicious intent, models often inherit bias from the datasets they are trained on. This leads to unfair responses, skewed recommendations, or inconsistent decisions, especially in hiring, lending, or support.

Bias may not be obvious until the damage is done. Left unchecked, it can affect brand perception, legal standing, and customer trust. Governance reviews and red-teaming are part of pragmatic AI security best practices

8. IP and Policy Violations

Many AI tools retain input data for analytics or retraining. This isn’t always clearly disclosed. If employees feed sensitive content into these tools, the company may end up violating internal security policies or client contracts.

Some vendors offer opt-outs or enterprise controls. But if no one is reviewing those settings, data can leave your systems without anyone realizing it.

|

According to Check Point Research AI Security Report 2025, 7.5% of all user prompts contain sensitive or private information. |

These risks don’t require a headline incident to matter. They show up in small ways like missed reviews, unclear prompts, and poorly scoped tools. To reduce exposure, companies need stronger oversight. That includes clear policies, tool inventories, prompt guidelines, and technical controls built for AI, not just legacy SaaS.

A unified approach to enterprise AI security restores visibility and control. Without them, AI becomes another blind spot in your stack.

Why Traditional AI Security Doesn’t Cut It Anymore

Most companies have strong security tools in place, including access controls, DLP, encryption, and vendor risk reviews. These work well for software that behaves in fixed, predictable ways. But AI doesn’t follow the same patterns. It learns, adapts, and responds based on data, context, and user inputs.

SaaS apps are designed around structured workflows, whereas AI tools work with loose inputs, open-ended prompts, and dynamic outputs. That’s where traditional controls start to fall short.

DLP Misses What Prompts Reveal

DLP (Data Loss Prevention) tools are good at scanning attachments, forms, and outgoing messages. But they don’t catch someone pasting a client contract into a chatbot. They don’t block employees from feeding revenue numbers into a summarizer. The data is still exposed, just in a way existing tools weren’t built to detect.

Even if the content is not stored, the act of processing it still creates risk. And most teams don’t realize how often this happens. This is a blind spot many AI security tools for enterprises now aim to cover.

|

If you are exploring secure, enterprise-grade chatbot solutions, this guide to AI chatbot development breaks down what it takes to build one that actually works in real business environments. |

Access Doesn’t Mean Control

You can lock down folders and limit user permissions. But that doesn’t apply once someone copies sensitive data and inputs it into a model. Traditional access controls don’t monitor prompts or track what information leaves the source system and enters a third-party tool.

Once it is in the model, you don’t always know how it is stored, reused, or surfaced later. Mapping prompt flows is core to how to secure AI systems that rely on third-party models

Logs Come Too Late

Audit logs are supposed to catch risky behavior. In practice, they tell you what happened after the fact. They rarely include the actual prompt, the output, or the model’s response logic. If the output contains sensitive information, there’s often no alert, only cleanup.

In AI workflows, reactive logging isn’t enough. Preventive guardrails are now a baseline AI security best practice.

Vendor Defaults Add More Risk

Many AI tools store user data to improve performance. These settings are often turned on by default. Unless someone checks during onboarding, your data could be retained, reused, or included in future model training without your knowledge, contrary to AI security compliance for enterprise leaders.

|

As per 2025 Data Risk Report, AI tools like ChatGPT and Microsoft Copilot were linked to millions of data loss incidents in 2024, including widespread leaks of social security numbers. |

Without custom enterprise settings, you lose control the moment input leaves your environment.

Protecting AI means securing the interaction. You need visibility into what goes in, what comes out, and what gets stored along the way.

What Enterprises Are Getting Wrong

Many companies approach AI like they would a pilot app or an analytics report, something to test, evaluate, and then move on from. But AI is not a one-time deployment. It is an evolving system that interacts with live data, changes behavior over time, and affects decisions across departments.

When AI is treated like a short-term initiative, the setup often skips critical design steps: no lifecycle planning, no scaling strategy, and no long-term oversight. That creates exposure, especially once the model is embedded in core workflows.

Too Much Trust in Vendor Defaults

There is a growing habit of relying on whatever security, privacy, and performance policies a vendor offers out of the box. While those settings may cover basic compliance, they rarely match the needs of a specific business or industry.

Without an internal AI security framework for businesses to guide vendor selection and configuration, teams end up accepting default behaviors such as storing prompts or retraining on user data, without realizing the risk.

Security Comes Too Late

In many cases, security reviews happen only after the tool is live. Once a business team is already using an AI assistant or workflow, it becomes harder to roll back. Procurement might approve the tool without a full risk review. IT could be left out of the architecture discussion entirely. Legal often hears about it only after it’s already in use.

By the time risk teams get involved, data has already been shared, and user habits are locked in. Early reviews reduce AI security risks in enterprises and speed approvals.

No Single Owner

AI systems rarely fall neatly under one department. Data teams handle development, business units drive usage, and security tries to set guardrails. Without clear ownership, there is no accountability for performance, safety, or compliance.

This leads to confusion over who maintains the model, updates training data, manages access, or tracks behavior across versions. Assigning ownership clarifies AI model security and governance across the lifecycle.

Missing Ground Rules

Many companies still lack basic operational standards for AI. There is no guidance on what data can be used, how to label inputs, when to validate output, or how to version updates. These steps are foundational for trust, consistency, and control.

Without these in place, every new AI use case introduces avoidable risk. And the more tools you add, the harder it becomes to manage. Ground rules operationalize AI security best practices without slowing delivery.

How to Rethink AI Security: 6 Fixes That Work

Most AI security risks aren’t caused by malicious attacks. They show up because of unclear policies, poor visibility, or blind trust in vendor defaults. These six actions can help reduce exposure, without slowing down AI adoption.

1. Inventory Everything

Start by listing out all the AI systems in use. That includes tools built in-house, third-party models, and embedded copilots inside CRMs or support platforms. For each one, document what it’s connected to, what data it uses, and who owns it.

This step is often skipped because tools are adopted at the team level. A live inventory is the first step in how to secure AI systems at scale. Without a central inventory, there is no way to track what’s running, where the risks are, or how many tools are using sensitive data.

2. Control Inputs and Outputs

Not all data should go into a model. And not all outputs should be shared without review. Set basic rules on what types of content are allowed like contracts, customer data, or financials, and flag anything that’s off-limits.

On the output side, watch for responses that include confidential data, hallucinations, or policy violations. If the model has access to internal systems, limit what it can summarize or extract. Input/output guardrails are foundational AI security best practices.

3. Restrict Access

AI access should be scoped by role and use case. A marketing assistant doesn’t need the same permissions as a product manager. A finance model shouldn’t be available to every team. And users shouldn’t be able to access training datasets unless they are part of the model development team.

Use identity management and access control systems to apply these rules consistently across tools and teams.

4. Version Your Models

Every model version should be tracked like software. Note what data it was trained on, when it was last updated, and what business context it was built for. This helps you identify where outputs came from if something goes wrong.

Versioning also creates accountability. If a model is making incorrect predictions or revealing sensitive content, you need to know which version caused the issue and whether it’s still in use. Versioning supports AI security compliance for enterprise leaders and audits.

5. Don’t Trust Default Settings

Review every vendor’s data handling and privacy policy. Many tools store prompts or share data with third parties unless you opt out. Others keep logs indefinitely or use your inputs to retrain their models.

Don’t assume the default settings are secure. Make sure your team is involved in reviewing each tool’s configuration before going live, and that you have control over how data is stored and used. This due diligence unlocks the benefits of AI security solutions without blocking adoption.

6. Create a Red Team

Build a small internal group to test your models like an attacker would. Ask them to trigger unwanted responses, bypass filters, or extract confidential content. See how the system behaves under stress or edge cases.

Red-teaming is now standard in AI security best practices and prepares for the future of AI security. This helps you identify gaps in prompt handling, permissions, and model behavior before someone outside your company does it first.

Security for AI needs to be active, not reactive. These six fixes give your teams a place to start, with controls that match how AI tools actually work.

What to Ask Your AI Vendors Now

Most vendors will mention that their platform is “secure” or “compliant.” But that doesn’t always mean your data is protected the way you expect. GenAI tools process and store information differently from traditional software. Unless you ask the right questions, you’re left guessing what happens behind the scenes.

Here are five questions that help uncover how your data is actually being handled and whether the tool fits your security requirements.

1. Where Does the Data Go After Input?

Get clarity on what happens immediately after someone enters a prompt. The answer should map cleanly to your AI security framework for businesses.

-

Does the data stay within your environment?

-

Is it passed to external servers?

-

Is it processed in real time, or stored for later use?

Ask for details on where the data physically resides, how long it’s retained, and what controls exist for deletion.

2. Is My Data Used for Model Training?

Many vendors train their models using customer inputs, especially in default or freemium versions. Even some enterprise tools keep data for fine-tuning unless you explicitly opt out. Clarify controls to satisfy AI security compliance for enterprise leaders.

Make sure your contract states whether your data will be used for training. If it is, confirm how it’s separated from other customers’ data and how it’s anonymized.

3. How Are Inputs Logged and Stored?

Some tools keep detailed logs of every user prompt. Others store prompt history in shared environments for debugging or analytics. You need to know who can see those logs, how they are protected, and whether they can be purged.

If logging can’t be disabled, ensure there are controls around encryption, redaction, and access. Look for encryption, retention, and purge options in AI security tools for enterprises.

4. Can I Audit Model Behavior Over Time?

Ask if the vendor provides tools to track how the model performs across different versions and use cases. This is key for compliance, especially if the model is used in regulated areas like finance, HR, or healthcare.

Auditability should cover both inputs and outputs, with the ability to trace back to a specific session or user. Versioned auditability is central to AI model security and governance.

5. Can I Restrict Access to Internal Knowledge?

If the model integrates with your knowledge base, set limits on what it can access. Not all documents should be available to all users or prompts.

Ask if you can scope access by role, tag content, or apply filters at the model level. Role-scoped retrieval enforces AI security for enterprises without killing usability.

These questions should be part of every AI tool evaluation, not just during onboarding, but whenever you renew or scale up usage.

Where Closeloop Fits In

Most AI vendors offer prebuilt models and a quick path to deployment. But security, context, and long-term control often get left behind. At Closeloop, we approach AI integration services differently as we design for enterprise AI security first, then scale.

We build systems that reflect your data policies, internal workflows, and risk profile. Whether it is a Generative AI assistant, a domain-specific LLM, or a custom automation layer, our architectures embed AI security best practices to make sure your data stays in your environment and under your control.

We design secure architectures that support audit trails, input validation, and role-based access. That includes integration with your existing CRM, ERP, or data warehouse, along with guardrails that limit what users can feed in or pull out.

Our Generative AI services also include vendor evaluation and security advisory. We align deployments to your AI security framework for businesses and regulatory needs. If you are rolling out third-party tools or internal copilots, we help you assess what data is at risk, where to apply restrictions, and how to scale without losing visibility.

Our team works closely with you to define access policies, data flows, and governance models that fit your specific use case. If you are evaluating AI solutions and want to avoid the common security pitfalls, our consultants can help you plan it right from day one. And if you’re already live, we’ll help you close the gaps that most companies miss.

Final Thoughts: You Can’t Ignore This

AI is moving fast, and it’s already deep inside your business. Sales uses it to draft proposals, support teams to manage incoming requests, and internal users rely on it for day-to-day tasks. That shift brings real value. But it also introduces risk you can’t see until it’s too late. This is the moment to adopt a pragmatic AI security guide mindset across teams.

Security in AI is about knowing how tools behave, what they retain, and who has access to what. Treat this as AI security for enterprises, not one-off app reviews. You need clear ownership. You need controls that work at the input and output level. And you need visibility that covers prompts, integrations, model versions, and user actions.

AI systems interact with sensitive data in ways traditional tools were never built to monitor. If your company is scaling AI without updating how it thinks about governance and exposure, risk will build quietly across teams and tools. Build for today and the future of AI security with durable controls.

At Closeloop, we help enterprises close that gap. Our next-gen engineering services are built around secure deployment, controlled integration, and real-world usage patterns. We don’t just develop models; we build trusted systems around them, designed for scale, visibility, and accountability.

Whether you are planning a new AI integration or reviewing what’s already in place, this is the right time to act. Security is part of getting AI right from the start, with clear ownership, governance, and AI security tools for enterprises that fit your stack.

Talk to our AI team about building responsibly with systems that deliver value without exposing your business.

Stay abreast of what's trending in the world of technology

Cost Breakdown to Build a Custom Logistics Software: Complete Guide

Global logistics is transforming faster than ever. Real-time visibility, automation, and AI...

Logistics Software Development Guide: Types, Features, Industry Solutions & Benefits

The logistics and transportation industry is evolving faster than ever. It’s no longer...

From Hurdle to Success: Conquering the Top 5 Cloud Adoption Challenges

Cloud adoption continues to accelerate across enterprises, yet significant barriers persist....

Gen AI for HR: Scaling Impact and Redefining the Workplace

The human resources landscape stands at a critical inflection point. Generative AI in HR has...