Across global supply chains, ad networks, and R&D partnerships, a new kind of conversation is taking shape. It is not about how much data a company has, but about whether it can collaborate without breaking legal agreements, customer trust, or compliance audits.

The intent to collaborate is high. So is the risk.

Enterprises are under pressure to work with partners on shared analytics without surrendering ownership, violating privacy laws, or inviting breach headlines. For years, teams tried solving this with NDAs, exports, hashed IDs, and half-blind joins. It was messy, inefficient, and rarely scalable.

Now, a new model is taking hold: analytics without exposure.

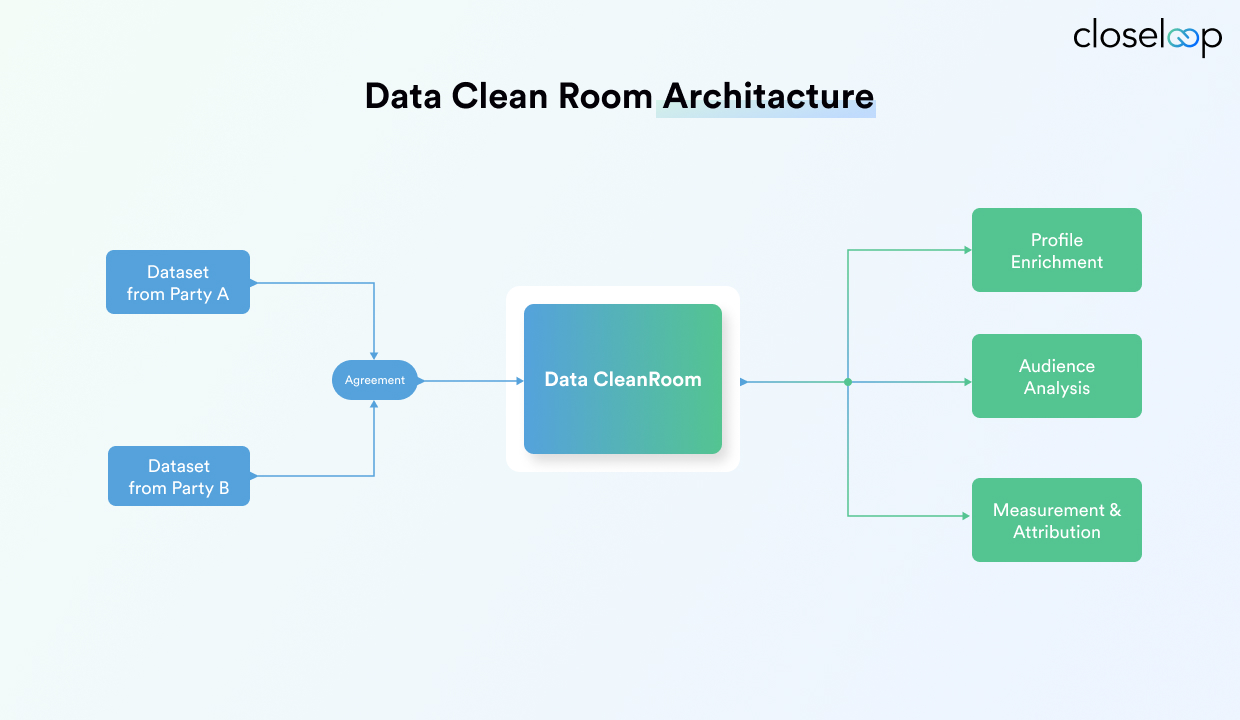

Data clean rooms are designed for precisely this. Two or more parties contribute datasets. No raw data changes hands, and everyone queries within a controlled environment, subject to rules that prevent misuse or re-identification. The output is useful insight without legal panic.

For CDOs, CMOs, and operations leaders, this is no longer a niche technology decision. It is a strategic capability. The ability to collaborate across ecosystems without risk is becoming foundational to how modern enterprises operate.

In the sections ahead, we will cover what a data clean room is, what problems it solves, how data clean room platforms like Databricks and Snowflake enable it, and why now is the right time to build a clean room strategy that goes beyond compliance.

Data Clean Room Basics: What It Is and What It Solves

A data clean room is a secure, rule-governed environment where multiple organizations can perform joint analysis on their combined datasets without any party accessing another's raw data.

Think of it as a neutral zone. Each participant maintains full control of their source data. Instead of exchanging files or building costly pipelines, parties contribute data into a shared environment under strict usage policies. Analytical queries run within the clean room, and results are privacy-safe, typically aggregated, filtered, or anonymized to prevent any re-identification or misuse.

This data clean room architecture is designed for enterprises that want to collaborate on analytics while minimizing risk. The system ensures that no one can extract granular records, reverse-engineer identities, or see more than they are allowed to.

When Data Clean Rooms Make Sense

Data clean room use cases have gained traction across industries where data privacy is non-negotiable but collaboration is critical. Some common data clean room examples include:

- Marketing and advertising analytics: Brands analyzing ad effectiveness with publishers or platforms, without sharing customer-level data
- Healthcare and pharma research: Hospitals and life sciences companies studying patient outcomes or trial results while complying with HIPAA
- Retail and supply chain planning: Manufacturers and retailers syncing sales and inventory trends without disclosing competitive information
- Financial services: Banks working with fintech partners to detect fraud while protecting personally identifiable information (PII)

These scenarios all have one thing in common: regulatory exposure and risk. With laws like GDPR, HIPAA, and CPRA in play, moving sensitive data outside your domain, no matter how well-intentioned, is no longer an acceptable tradeoff.

What Makes It Different from Traditional Data Sharing

In legacy setups, organizations often relied on file transfers, data lakes, or third-party aggregators to share data. Even with encryption or hashing, this still meant sending copies to another system, introducing exposure and often losing control over how data was used after delivery.

Data clean room solutions flip that model.

- No raw data leaves your system: You contribute to the clean room through controlled interfaces or views
- All usage is policy-enforced: Queries are permissioned, outputs are filtered, and rules are machine-enforced
- Outputs are privacy-preserving: Results are limited to aggregates or insights that meet minimum thresholds (e.g., 50 users per group)
- Auditability is built-in: Every action inside the clean room is logged and reviewable

Not Just for Tech Giants Anymore

While cloud-based data clean rooms were first pioneered by media and advertising platforms, modern implementations, especially from Snowflake and Databricks, have made them accessible to any enterprise with privacy-sensitive data and a need for secure collaboration.

For organizations handling customer data, IP, or regulated records, clean rooms are increasingly viewed as a necessary foundation for cross-company analytics. They don’t replace data sharing entirely, but they offer a smarter, safer alternative when risk tolerance is low and control matters.

Why Data Clean Rooms Are Gaining Momentum Now

Data clean rooms are not a new idea, but their relevance has shifted from niche innovation to strategic priority. Three major shifts are driving serious enterprise adoption across industries.

The Cookie Collapse Has Changed Data Collaboration Forever

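The advertising world is being rebuilt in real time. Apple and Mozilla already restrict third-party cookies by default in Safari and Firefox, and Google spent years working toward phasing them out in Chrome before deciding in 2024 to keep them and leave the choice to users. Either way, platforms that once allowed companies to track users across the web are losing their utility, and with them goes the foundation for much of modern digital advertising.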

As brands lose access to behavioral data, they are turning to first-party data to fill the gap. But using it effectively means working with partners, like publishers, platforms, and agencies, without compromising user privacy. Data collaboration in clean rooms makes that possible.

Clean rooms allow advertisers and media partners to analyze campaign performance, conversion lift, and audience overlap without revealing identities or exchanging sensitive data. For CMOs and data leaders, they are becoming an essential part of post-cookie attribution and audience strategy.

Compliance Pressure Is No Longer Contained to Legal Teams

Laws like GDPR and HIPAA used to feel like compliance checkboxes. Today, they influence architecture. The stakes have risen with stricter enforcement, steeper fines, and growing regulatory complexity. Beyond Europe and the US, dozens of countries have introduced data protection laws that restrict how customer data is stored, accessed, and shared.

In sectors like healthcare, retail, banking, and telecom, even internal data movement is being scrutinized. The idea of sending customer records to a vendor, even if anonymized, is often met with resistance from privacy teams.

Clean rooms offer a way forward on data privacy. By ensuring that data never leaves its origin and that all queries are bound by usage policies, they align well with evolving compliance frameworks. This alignment is critical for CIOs and compliance heads seeking to modernize analytics without creating new liabilities.

First-Party Data Is a Priority But Not in a Vacuum

Most enterprises now recognize the value of first-party data. But collecting it is just the beginning. Making it useful, especially in collaboration with partners, requires more than CRM logs or website clicks. It requires secure environments where insights can be extracted jointly, without data ever becoming exposed.

The way data clean rooms work supports responsible data use. They allow marketing, product, and operations teams to collaborate with ecosystem partners while respecting user consent and regulatory boundaries. This kind of privacy-first mindset is increasingly a brand asset, not just a technical standard.

Together, these trends are reframing what it means to share data. Data clean rooms are becoming the default way to collaborate when trust, control, and security are non-negotiable.

Core Capabilities That Define a Data Clean Room

Not all data clean rooms are built the same. While the concept, privacy-first analytics without raw data exchange, remains consistent, the strength of a clean room depends on its underlying controls. These are what make the model legally defensible, operationally secure, and scalable across partners.

Below are the foundational features that define an enterprise-grade data clean room technology stack.

Fine-Grained, Policy-Based Access Control

Clean rooms don’t grant open access. Every query, field, and output is governed by policy. This includes:

- User-level permissions: Analysts or partners only access the segments they are authorized to work with.
- Field-level restrictions: Sensitive attributes (like age, income, or geography) can be masked, excluded, or made queryable only in aggregate.
- Query monitoring and approval: Some platforms allow policy managers to pre-approve query logic or restrict it based on risk scoring.

Clean rooms support multi-organization access while ensuring that no participant oversteps the bounds of what’s agreed. In high-stakes use cases like pharma research or financial data sharing, these controls are essential for data security in clean rooms.
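As a rough illustration of how such policies might be machine-enforced, here is a minimal Python sketch. The `Policy` and `QueryRequest` classes and the `check_query` function are hypothetical names invented for this example; they are not the API of Snowflake, Databricks, or any other platform. The sketch only shows the idea of validating a query request against user-level and field-level rules before it is allowed to run.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical clean room policy for one participant role."""
    allowed_segments: frozenset       # segments this role may query
    blocked_fields: frozenset         # fields that may never be referenced
    aggregate_only_fields: frozenset  # fields usable only inside aggregates


@dataclass(frozen=True)
class QueryRequest:
    """Simplified description of a query (not a real SQL parser)."""
    segment: str
    group_by: tuple = ()      # fields used as grouping keys
    aggregated: tuple = ()    # fields that appear only inside COUNT/AVG/etc.
    selected_raw: tuple = ()  # fields returned row by row


def check_query(policy: Policy, query: QueryRequest) -> list:
    """Return a list of policy violations; an empty list means the query may run."""
    violations = []
    if query.segment not in policy.allowed_segments:
        violations.append(f"segment '{query.segment}' is not authorized")
    referenced = set(query.group_by) | set(query.aggregated) | set(query.selected_raw)
    for name in sorted(referenced & policy.blocked_fields):
        violations.append(f"field '{name}' is blocked")
    # Grouping by a field or selecting it row by row exposes individual values.
    raw_use = set(query.selected_raw) | set(query.group_by)
    for name in sorted(raw_use & policy.aggregate_only_fields):
        violations.append(f"field '{name}' is aggregate-only")
    return violations


policy = Policy(
    allowed_segments=frozenset({"loyalty_members"}),
    blocked_fields=frozenset({"email"}),
    aggregate_only_fields=frozenset({"income", "age"}),
)
ok = QueryRequest(segment="loyalty_members", group_by=("region",), aggregated=("income",))
bad = QueryRequest(segment="loyalty_members", selected_raw=("email", "age"))

print(check_query(policy, ok))   # []
print(check_query(policy, bad))  # two violations: blocked email, aggregate-only age
```

Returning a list of violations rather than a single yes/no makes it easier for a policy manager to see why a query was rejected.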

Secure Multi-Party Computation or Output Thresholds

A central innovation in clean rooms is that participants can compute insights across datasets without directly accessing one another’s data. This is made possible through:

- Secure multi-party computation (SMPC): A cryptographic approach where parties jointly compute a function over their inputs while keeping those inputs private.
- Aggregation thresholds: Clean rooms often enforce minimum row counts (e.g., “no outputs unless query affects more than 50 users”) to avoid accidental re-identification.

These features allow for trustless collaboration, meaning partners can run shared analysis without needing to trust each other’s data handling practices.
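To make the SMPC idea more concrete, the sketch below uses additive secret sharing, one of the simplest building blocks in this family. It is an illustration only: all parties are simulated in a single process, participants are assumed to follow the protocol honestly and not collude, and the function names and input values are made up for the example. Production clean rooms may rely on different techniques entirely.

```python
import secrets

PRIME = 2**61 - 1  # modulus for all share arithmetic (a Mersenne prime)


def make_shares(value: int, n_parties: int) -> list:
    """Split `value` into n random-looking shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % PRIME
    return shares + [last]


def secure_sum(private_inputs: list) -> int:
    """Compute the total of all inputs from shares, never from the raw inputs."""
    n = len(private_inputs)
    # Each party splits its input into n shares, one destined for each party.
    all_shares = [make_shares(v, n) for v in private_inputs]
    # Party j adds the j-th share from every party and publishes only that partial sum.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Adding the published partial sums yields the overall total.
    return sum(partial_sums) % PRIME


flagged_accounts_per_bank = [120, 75, 310]  # illustrative private inputs
total = secure_sum(flagged_accounts_per_bank)
print(total)  # 505
```

Each individual share is a uniformly random number, so a party that sees only its own incoming shares learns nothing about another party's input; only the combined total is revealed.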

Privacy Mechanisms: Masking, Pseudonymization, Suppression

Data clean rooms apply layers of privacy protection directly to the data before any output is generated. Common methods include:

- Data masking: Removing or obfuscating sensitive values at rest or during processing
- Pseudonymization: Replacing user identifiers with hashed or encrypted tokens that can't be linked back without a separate key
- Row suppression: Automatically removing results that don’t meet privacy thresholds, such as user counts that are too small

These mechanisms are enforced by the system itself, not left to user discipline, another core data clean room benefit. This is what separates a clean room from a shared analytics sandbox or traditional database access.
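The three mechanisms above can be sketched in a few lines of Python using only the standard library. This is a simplified illustration, not any platform's implementation: the function names, the masking format, and the key are hypothetical, and the threshold of 50 simply mirrors the example figure used earlier in this article.

```python
import hashlib
import hmac
from collections import Counter

MIN_GROUP_SIZE = 50  # assumed threshold, mirroring the "50 users" example


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256) token."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def suppressed_counts(rows: list, group_field: str, min_size: int = MIN_GROUP_SIZE) -> dict:
    """Count rows per group and drop any group smaller than min_size."""
    counts = Counter(row[group_field] for row in rows)
    return {group: n for group, n in counts.items() if n >= min_size}


key = b"demo-key-kept-outside-the-clean-room"  # illustrative only
token = pseudonymize("customer-1001", key)
print(len(token))                           # 64 hex characters
print(mask_email("jane.doe@example.com"))   # j***@example.com

rows = [{"region": "west"}] * 60 + [{"region": "east"}] * 12
print(suppressed_counts(rows, "region"))    # {'west': 60}
```

A keyed hash is used here rather than a plain hash because common identifiers such as email addresses can often be guessed and re-hashed; without the key, the tokens cannot be recomputed that way.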

End-to-End Governance, Auditability, and Lineage

For data clean rooms to stand up in regulated environments, they must provide full transparency into how data is used. That includes:

- Audit logs: Every query, policy change, and output is tracked with time stamps and user identifiers
- Data lineage: Clean rooms should provide visibility into where data originated, how it was processed, and what transformations were applied
- Versioning and change tracking: Especially important for clean rooms used in clinical research, financial reporting, or government analytics

These governance features make clean rooms not just privacy-respecting but enterprise-ready data clean room solutions.
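As one way to picture an audit trail, the sketch below records each action with a timestamp and user identifier and links every entry to the hash of the previous one, so later edits become detectable. The hash chaining is an assumption added for illustration, as are the `AuditLog` class and the example users; the platforms discussed here have their own logging mechanisms.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Hypothetical append-only log; each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        """Hash every field of an entry except its own stored hash."""
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, user: str, action: str, detail: str) -> dict:
        """Append a timestamped entry linked to the previous entry's hash."""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "detail": detail,
            "previous_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True only if every entry is unmodified and correctly chained."""
        previous_hash = ""
        for entry in self.entries:
            if entry["previous_hash"] != previous_hash or entry["hash"] != self._digest(entry):
                return False
            previous_hash = entry["hash"]
        return True


log = AuditLog()
log.record("analyst@brand.example", "query", "COUNT(*) BY region")
log.record("admin@host.example", "policy_change", "min_group_size 50 -> 100")
print(log.verify())                     # True
log.entries[0]["detail"] = "SELECT *"   # simulate tampering with an old entry
print(log.verify())                     # False
```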

Together, these capabilities allow data clean rooms to serve a growing number of high-trust, high-compliance analytics scenarios. For decision-makers weighing clean room adoption, these are the non-negotiables to evaluate in any data clean room platform, whether it’s Snowflake’s prebuilt setup or a customizable implementation on Databricks.

Business Use Cases Across Industries

Across sectors, clean rooms are already helping enterprises collaborate on analytics without exposing sensitive data. Below are real-world scenarios that illustrate data clean room use cases and how different industries are using clean rooms to solve long-standing challenges in privacy, performance, and partnership.

In Forrester’s Q4 B2C Marketing CMO Pulse Survey, 2024, 90% of respondents use a data clean room for marketing use cases.

Retail and CPG: Measuring Ad Impact Without Customer Exposure

A large consumer electronics brand wants to assess the effectiveness of its advertising across major publisher networks. Traditionally, this would require sending hashed customer data to each media partner for campaign matching, a risky and compliance-heavy process.

With a clean room setup, both the brand and publisher load their datasets into a governed environment. The publisher contributes impression and clickstream data; the brand contributes purchase and CRM data. Neither sees the other’s raw records.

The clean room computes conversion lift, attribution windows, and audience overlap, all while maintaining strict output controls. This enables the brand to optimize spend and creative strategy without exposing customer identities or violating data handling agreements, an archetypal data clean room example.
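For readers who want to see what two of these metrics look like in practice, here is a small Python sketch. It uses the common definition of conversion lift as the relative difference between the conversion rates of an exposed group and a control group, and it treats audience overlap as the number of shared pseudonymous tokens. The function names, the threshold default, and all figures are illustrative assumptions rather than output from any real campaign or platform.

```python
def conversion_rate(converters: int, audience: int) -> float:
    """Share of an audience that converted."""
    if audience <= 0:
        raise ValueError("audience must be positive")
    return converters / audience


def conversion_lift(exposed_conv: int, exposed_total: int,
                    control_conv: int, control_total: int) -> float:
    """Relative lift of the exposed group's conversion rate over the control group's."""
    exposed_rate = conversion_rate(exposed_conv, exposed_total)
    control_rate = conversion_rate(control_conv, control_total)
    if control_rate == 0:
        raise ValueError("control group has no conversions; lift is undefined")
    return (exposed_rate - control_rate) / control_rate


def audience_overlap(brand_tokens: set, publisher_tokens: set, min_size: int = 50):
    """Count tokens present on both sides; return None if the overlap is below min_size."""
    overlap = len(brand_tokens & publisher_tokens)
    return overlap if overlap >= min_size else None


lift = conversion_lift(exposed_conv=300, exposed_total=10_000,
                       control_conv=200, control_total=10_000)
print(f"{lift:.0%}")  # 50%

brand = {f"t{i}" for i in range(100)}
publisher = {f"t{i}" for i in range(40, 200)}
print(audience_overlap(brand, publisher))  # 60
```

Only the aggregate figures leave the function; the token sets themselves stay inside, which is the property a clean room is meant to enforce at the platform level.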

Healthcare: Multi-Institution Research Without Compromising PHI

A group of hospitals and a pharmaceutical research team want to analyze treatment outcomes across multiple regions. The goal is to identify how patient responses vary by demographics, comorbidities, and drug dosage.

However, sharing protected health information (PHI) directly across institutions triggers a cascade of regulatory and ethical concerns. Clean rooms address this by enabling a federated model focused on data privacy.

Each hospital uploads anonymized patient data into the clean room with field-level controls in place. The research team defines queries that calculate cohort-level statistics like response rates and side-effect incidence, without ever accessing individual patient records.

This model accelerates medical insights while ensuring HIPAA compliance and institutional data control.

Financial Services: Strengthening KYC Through Collaborative Fraud Detection

A consortium of banks wants to identify synthetic identities and fraudulent account behavior that span multiple institutions. Sharing customer data outright is off the table due to legal and reputational risk.

Using a clean room, each bank contributes customer account metadata, transaction summaries, and behavioral signals. Secure multi-party computation enables cross-bank analysis to flag anomalies, such as coordinated account openings or transaction chaining, without exposing PII, demonstrating how data clean rooms work in high-stakes environments.

The result is a shared intelligence layer that strengthens fraud detection across the network while preserving customer privacy and compliance with data-protection regulations and standards such as GLBA and PCI DSS.

Manufacturing and Automotive: OEM-Supplier Collaboration Without Revealing Competitive IP

A global automotive OEM wants to understand recurring defect patterns linked to a specific class of components. The suppliers hold detailed QA and test data, but are unwilling to share full production logs due to competitive sensitivities.

A clean room allows the OEM to contribute warranty claims and repair records, while suppliers provide fault codes and production batch metadata. Clean room policies ensure that supplier-side details are only used for aggregate analysis, not reverse-engineering or benchmarking, reinforcing data security in clean rooms and partner trust.

Together, they identify the root cause of product failures and improve quality control, all while respecting each party’s data ownership and intellectual property boundaries.

Each of these scenarios highlights a common theme: analytics across boundaries, without compromise. Data clean rooms adapt to the priorities of different industries, whether that’s privacy, compliance, IP protection, or partner trust.

Databricks and Snowflake: Two Leading Approaches to Clean Rooms

Enterprises considering data clean rooms today typically find themselves evaluating one of two leaders: Snowflake or Databricks. Both platforms enable secure, privacy-preserving collaboration, but their approach, flexibility, and ideal use cases differ in meaningful ways.

Understanding these differences helps decision-makers choose not just a platform, but a data collaboration strategy that aligns with their long-term architecture, governance model, and business goals.

Snowflake: A Prebuilt, Interoperable Clean Room Model

Snowflake offers clean room capabilities as a native feature within its Data Cloud ecosystem. Enterprises can quickly spin up collaboration environments using Secure Data Sharing, Data Clean Rooms, and collaboration templates.

The experience is designed to be intuitive:

- Clean room hosts control which data is shared, how it’s filtered, and which fields are visible
- Participants run permitted queries without ever seeing underlying records
- Outputs are automatically constrained using minimum row thresholds and privacy filters
- Access policies are managed through native governance features like object tags and row-level security

One of Snowflake’s strengths is its interoperability. Clean rooms can connect with partners, suppliers, and vendors across the Snowflake network with minimal setup. This makes cloud-based data clean rooms especially attractive to marketing, retail, and media organizations that frequently collaborate with external entities.

It also benefits enterprises that prefer low-code environments or have smaller data teams. With built-in controls and minimal configuration, Snowflake clean rooms are optimized for quick launches and cross-functional use.

Databricks: A Flexible, Open Clean Room Built on Delta Sharing

Databricks takes a more modular, customizable approach to clean rooms. Rather than a single out-of-the-box product, it offers the building blocks for enterprises to construct tailored environments using:

- Unity Catalog for unified data governance and fine-grained access controls
- Delta Sharing for secure data exchange across platforms and organizations
- Native support for complex analytics and machine learning inside notebooks and production workflows

This architecture suits organizations that treat their clean room as an extension of their broader data science and AI infrastructure, integrating privacy transformations and model workflows, an advanced path among data clean room solutions. It allows greater control over how data is masked, which models are run, and how results are produced.

Databricks clean rooms are especially valuable in sectors like healthcare, finance, and advanced manufacturing, where organizations may need to:

- Build predictive models across multiple data contributors
- Enforce customized privacy transformations before query execution
- Integrate clean rooms into their existing Spark or MLflow pipelines

For enterprises with mature data teams and a need for flexibility and performance at scale, Databricks offers a compelling option.


Choosing Between Snowflake and Databricks: Key Differences

| Feature | Snowflake | Databricks |
| --- | --- | --- |
| Clean Room Setup | Native templates, minimal configuration | Custom-built using Delta Sharing + Unity Catalog |
| Data Governance | Built-in with role- and row-level access controls | Highly granular via Unity Catalog |
| Interoperability | Seamless with partners already on Snowflake | Cross-platform support via open sharing standards |
| Query Flexibility | Predefined SQL access | Supports SQL, Python, notebooks, Spark-based workloads |
| ML and Data Science | Limited to SQL-based transformations | Natively supports ML models and data pipelines |
| Compute and Cost Model | Pay-per-use, autoscaling compute | Fully customizable; costs tied to Spark clusters |
| Best Fit For | Marketing, retail, media, partnerships with quick setup | Healthcare, finance, manufacturing, custom data science |

Choosing the right clean room platform is only part of the equation. Your success also depends on having the right data engineering partner to architect, integrate, and operationalize data clean room platforms at scale.

The Role of Closeloop in Data Clean Room Selection

Many enterprises don’t fall neatly into one category. A retail business may want Snowflake for campaign analytics, while its data science team prefers Databricks for ML personalization. A global manufacturer might need both, depending on whether it's collaborating with suppliers, research teams, or field service providers.

That’s where a partner like Closeloop becomes valuable. Our teams help assess not just technical fit but strategic alignment, accelerating enterprise data clean room adoption. We guide enterprises through:

- Governance policy design and cross-platform architecture
- Clean room provisioning, security model configuration, and usage templates
- Long-term integration with analytics, CRM, and data lake systems
- Training and adoption for both business and engineering users

We work across Snowflake and Databricks environments, ensuring your clean room strategy complements, not complicates, your broader data ecosystem.

For organizations rethinking data collaboration, the Snowflake vs. Databricks question is about choosing the kind of control, scalability, and extensibility their future demands.

Key Challenges in Data Clean Rooms Adoption

Data clean rooms offer a compelling model for privacy-preserving analytics, but implementation is not without its hurdles. Many enterprises underestimate the preparation and alignment required to make clean rooms work across teams and partners. Below are the most common challenges that surface during adoption, and what they reveal about a company’s clean room readiness.

Governance Misalignment Between Partners

Even the most secure clean room cannot fix a governance mismatch. When two organizations collaborate, each brings its own data policies, legal constraints, and compliance interpretations. If one party permits field-level analysis while the other restricts down to aggregates, consensus on query logic becomes a bottleneck.

Without a shared governance framework, clean room projects stall before they start. Negotiating usage rules, consent boundaries, and policy enforcement must happen early and be built into the clean room itself.

Technical Gaps: Identity and Data Standardization

A clean room is only as useful as the data it receives. If participating organizations store records in incompatible formats or lack a common ID framework, queries won’t produce actionable results.

- Identity resolution, especially across hashed or pseudonymized datasets, is one of the hardest parts to get right
- Data standardization issues (naming conventions, date formats, taxonomy mismatches) lead to inaccurate outputs or complete failures

These are not clean room problems. They are foundational data problems that must be addressed before collaboration begins if the clean room is to deliver its benefits.
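A small Python sketch shows why standardization has to come first: two parties that hash the same email address will only get matching tokens if they normalize it the same way beforehand. The helper names and the list of accepted date formats are assumptions made for this example, and the unkeyed SHA-256 hash is used purely to keep the sketch short; a real deployment would agree on formats and hashing rules with its partners.

```python
import hashlib
from datetime import datetime

# Assumed formats seen across partners; note that 03/04/2025 is read as month/day here.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d-%b-%Y")


def normalize_email(raw: str) -> str:
    """Trim whitespace and lowercase so both parties hash the same string."""
    return raw.strip().lower()


def normalize_date(raw: str) -> str:
    """Convert any of the accepted formats to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")


def match_key(email: str) -> str:
    """SHA-256 of the normalized email, used here as an illustrative join key."""
    return hashlib.sha256(normalize_email(email).encode("utf-8")).hexdigest()


# Without normalization these two inputs would hash to different tokens.
print(match_key(" Jane.Doe@Example.com ") == match_key("jane.doe@example.com"))  # True
print(normalize_date("03/15/2025"))   # 2025-03-15
print(normalize_date("15-Mar-2025"))  # 2025-03-15
```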

The Plug-and-Play Fallacy

Despite platform advancements, clean rooms are not turnkey tools. Assuming that a clean room will deliver immediate value without careful configuration leads to frustration. Privacy policies, role-based access, and output thresholds all require upfront design, and that design is core to making data clean rooms work effectively.

Treating clean rooms as a checkbox implementation risks weak guardrails, failed audits, or unused infrastructure.

Misalignment Between Business, Legal, and IT Teams

Successful clean room projects require strong coordination. Business leaders define use cases. Legal teams shape access boundaries. IT teams configure the environment and enforce policies.

When these groups work in isolation, adoption suffers. Queries get blocked, outputs are delayed, or the clean room becomes a silo rather than a shared asset. Cross-functional clarity ensures safe scaling of data clean room solutions.

Avoiding these pitfalls starts with a realistic understanding of what a clean room can and cannot solve. The technology is ready. The real question is whether your organization is aligned around using it effectively.

Building a Future-Ready Clean Room Strategy

The architecture matters, but what truly determines success is the groundwork you lay before a single query is run.

Enterprise teams that treat clean rooms as strategic assets rather than tactical integrations build solutions that scale, comply, and deliver measurable value. Below is a checklist to guide that process.

1. What Data Are You Working With?

Start with clarity on the datasets involved. Are you analyzing:

- Structured data (transaction records, CRM data, campaign metrics)?
- Semi-structured or unstructured data (logs, documents, transcripts)?

The more complex the data format, the more thought you’ll need to put into schema harmonization and permissible transformations. Clean rooms typically perform best with structured data, but platforms like Databricks can accommodate more flexibility if your use case demands it.

Also consider data volume and update frequency while planning data clean room architecture. These two variables affect performance, compute costs, and refresh strategies.

2. How Will Identity Be Resolved?

Collaborative analytics often depend on linking records across datasets. That makes identity framework design critical. You’ll need to determine:

- Do both parties use email, phone number, or customer IDs?
- Are those identifiers hashed or tokenized?
- Who owns the matching logic and maintains ID maps?

Clean rooms don’t solve identity mismatches; they expose them. Your strategy should include secure, compliant methods for connecting datasets without increasing re-identification risk, central to data security in clean rooms.

3. Who Controls Access and Query Rights?

To operationalize data privacy with clean rooms, define who gets to do what before setting up the environment. This includes:

- Business users vs data engineers: what kind of queries are allowed, and by whom?
- Field-level controls: are some data points (like age, income, region) restricted or masked?
- Output policies: what’s the minimum aggregation threshold before results are returned?

Platforms like Snowflake and Databricks provide mechanisms to enforce this, but only if roles and boundaries are clearly defined upfront.

4. What Standards and Regulations Apply?

If your data contains PII, PHI, or financial records, your clean room must be aligned with:

- Regulations and industry standards: GDPR, HIPAA, CPRA, GLBA, PCI DSS
- Internal policies: data retention limits, auditability requirements, consent frameworks

For example, GDPR-compliant environments may require automatic row suppression or region-based access controls.

5. Are the Right Stakeholders Aligned?

Finally, clean room success depends on cross-functional buy-in. Your strategy should involve:

- Data governance leaders, to define access and usage rules
- IT and engineering teams, to implement and scale the architecture
- Legal and compliance, to validate that safeguards are audit-ready
- Business teams, to ensure that outputs are usable and valuable

Without this alignment, even the most secure clean room will sit idle, or worse, be misused.

A clean room strategy is an ecosystem. Getting the fundamentals right today builds the foundation for scalable, secure collaboration tomorrow.


Closeloop’s Approach to Enterprise Clean Room Solutions

At Closeloop, we help enterprises move from data collaboration intent to secure, operational reality. Clean rooms depend on strong architecture, smart governance, and clear alignment across teams. That’s where our role begins.

Offering expert data engineering services, we start with strategic advisory, helping organizations assess whether Snowflake or Databricks better suits their needs. Some teams prioritize quick activation and partner interoperability; Snowflake’s native clean room features are ideal for that. Others need deep customization, machine learning integration, or complex permission models, use cases where Databricks and Unity Catalog excel.

Our engineering teams support full data clean room implementation, including:

- Configuration of secure environments
- Role-based access design
- Output thresholds and aggregation controls
- Query template development for internal and partner use

For organizations working across hybrid or legacy systems, we develop custom connectors to bring siloed data into the cloud-based data clean rooms securely, whether it’s on-premise inventory systems, regional CRMs, or supplier portals.

Post-launch, we ensure clean rooms don’t become shelfware. Our services include:

- Training for analysts, marketers, and data scientists
- Governance documentation for legal and compliance review
- Audit log setup to support regulatory readiness

As Databricks certified partners and Snowflake governance experts, we’ve helped enterprise clients design clean room environments that are not only secure but usable, repeatable, and scalable.

Whether you’re experimenting with your first clean room or scaling multi-partner collaboration across business lines, Closeloop can help you build clean room strategies.

Conclusion: Secure Data Collaboration Starts with Clean Room Thinking

Data clean rooms are no longer experimental. They are becoming a core capability for enterprises that need to collaborate across ecosystems without increasing risk or compromising control. What started as a privacy safeguard in advertising is now powering everything from joint R&D in healthcare to supply chain insights in manufacturing.

For enterprise leaders, the window to act is now. As compliance requirements grow stricter and data ecosystems grow more complex, data clean room benefits extend from privacy to performance and partner trust. But success comes not from adopting a tool but from designing the right architecture, aligning stakeholders, and operationalizing governance from day one.

At Closeloop, we help organizations assess readiness, select the right data clean room platforms, and implement data clean room architecture that doesn’t just tick compliance boxes but also supports real business outcomes. Our cross-functional teams bring together platform expertise, custom integration capabilities, and governance-first thinking to ensure your clean room becomes a long-term asset.

Curious to know if your data architecture is clean room ready? Connect with our enterprise data team for a walkthrough.
